By Paddy Clark – Senior Test Engineer Consultant at Nimble Approach

In the fast-paced world of software development, efficiency and productivity are key. Writing code, especially repetitive or boilerplate sections, can often be time-consuming and tedious. That’s where GitHub Copilot comes into the picture – an AI-powered code generation tool developed by GitHub and OpenAI.

Copilot aims to revolutionise the way developers write code by offering intelligent suggestions and automating the process of generating code snippets, from simple functions to complex algorithms.

Below I’m going to explain my experience with GitHub Copilot as a Test Engineer, first from a general usage point of view and then looking more specifically at its ability to help with writing tests. It should be noted that GitHub Copilot is a rapidly evolving tool, so some of the points here may quickly become outdated (written June 2023).

Copilot’s Current Features

It’s important to understand what Copilot is, and what it isn’t (at the time of writing).

Note: The below code demos are at x2 speed.

Single code suggestions.

This gives Copilot’s top suggestion, to fill in on the same, next or multiple lines below. It can be anything from autocompleting the variable name to writing the entire method.

Multiple alternative suggestions.

Same as above, but displays up to 10 different suggestions, which open in a new temporary file.

Generating code suggestions from comments.

Here you can describe something you want to do using natural language within a comment, and Copilot will provide one or more suggestions.
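To make the comment-driven workflow concrete, here is a minimal Java sketch. It is a hand-written illustration, not captured Copilot output: the class name `CommentPromptSketch`, the method `isPalindrome` and the wording of the prompt comment are all assumptions made for this example. The comment plays the role of the natural-language prompt, and the method body is the kind of suggestion you would then review before accepting.

```java
final class CommentPromptSketch {

    // Prompt comment a developer might type (hypothetical wording):
    // Return true if the text reads the same forwards and backwards,
    // ignoring case and any non-alphanumeric characters.
    static boolean isPalindrome(String text) {
        int left = 0;
        int right = text.length() - 1;
        while (left < right) {
            char l = text.charAt(left);
            char r = text.charAt(right);
            if (!Character.isLetterOrDigit(l)) {
                left++;            // skip punctuation/spaces on the left
            } else if (!Character.isLetterOrDigit(r)) {
                right--;           // skip punctuation/spaces on the right
            } else {
                if (Character.toLowerCase(l) != Character.toLowerCase(r)) {
                    return false;  // mismatch, so not a palindrome
                }
                left++;
                right--;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(isPalindrome("A man, a plan, a canal: Panama"));
        System.out.println(isPalindrome("Copilot"));
    }
}
```

Whatever the tool suggests, the reviewing step stays with you: the comment only describes intent, so edge cases such as an empty string are worth checking by hand.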

General Usability

Before looking specifically at writing tests, I’ve summarised my general experience with Copilot. I’ve tried to keep these as objective as possible, as a lot of it is really personal preference.

- ✔ Boilerplate code suggestions are great. In general, for tasks of a repetitive nature, Copilot gets it right the majority of the time.

- ✔ It helps write functions. If you are unfamiliar with a language or want to speed up writing a function, then it can quickly suggest algorithms. The caveat here is that it’s important you understand what is going on, although in some instances it can help you learn.

- 4 IDEs supported. Copilot is currently limited to:

  - JetBrains IDEs (Beta)

  - Neovim

  - Visual Studio

  - Visual Studio Code

- 11 ‘core’ languages supported. View breakdown here.

- ❌ It’s not that fast. Multiple extended options (usually generating 10 suggestions) can take a long time (~30 secs). Quite often this would surpass the time I am prepared to wait.

- ❌ ChatGPT 4 is a lot more powerful, at present, than Copilot. It gives better suggestions, generally because it has a better understanding of the current state of the code. GitHub Copilot Chat should rectify this, but it is worth noting that Copilot is a bit behind other AI offerings.

- ❌ The scope for suggestions is limited. The more context you give Copilot, the better it is. It only suggests the following line, code block or function. Refactoring, suggesting other classes/files for code, and formatting are a few key features that Copilot doesn’t suggest.

- ❌ Non-deterministic. You sometimes get different suggestions for the same scenario.

- ❌ It’s not perfect. It can occasionally write some functions / logic incorrectly and can even add bugs. Also, it won’t always write the most efficient code.

Usability for Writing Tests

In this section, I’m looking more specifically at Copilot’s accuracy, efficiency, and effectiveness in writing UI, API and Unit tests. From generating UI test cases to validating API endpoints and crafting unit test scenarios, I’ll analyse the pros and cons of this AI-powered tool.

UI Tests

UI Example 1 – TypeScript – Cypress:

This example shows a Cypress project with a well-established test suite. The ‘Auth’ tests shown have numerous pre-existing Cypress scenarios written out. We can see here how Copilot fares at writing out a further scenario.

- Copilot suggests a legitimate test scenario given the context of the existing tests (albeit, there are very similar existing tests to the suggested test).

- The login details are correctly inferred.

- The selectors and assertions are correctly inferred.

- This is a very trivial scenario.

UI Example 2 – TypeScript – Cypress:

In a different use case now, we see how Copilot deals with changes in the codebase. This is a really basic scenario where we change the text of an element that is being tested and see if Copilot can recognise the change.

- Copilot fails to immediately suggest the correct assertion.

- Even after partially typing the assertion, the end suggestion is actually incorrect, as homepage is not capitalised.

- The user has to go to the line of code and edit it to prompt new suggestions, which shows that the tool has a little way to go here.

- This shows how little understanding Copilot has of the link between the tests and the development code.

API Tests

API Example 1 – JavaScript – SuperTest:

In this example, the repo contains only this file (i.e. it is a test-only codebase and the service is hosted elsewhere), and the only existing tests are the happy-path tests shown: a GET for all airlines (/airlines) and a GET for a valid airline (/airlines/1).

- Suggestions are very quick.

- The assertions, although not necessarily all correct, are very plausible suggestions.

In the same project, but for the POST method to create an airline, you can see how Copilot gets on with suggesting all the tests for the POST method from scratch.

Summary:

- Structure / Code. Generally good. The one issue is that the values being asserted should really be extracted into variables, as they are repeated twice.

- Assertions. Very good.

- Suggestions. Could be quicker here. A good choice of suggestions, all feasible.

Unit Tests

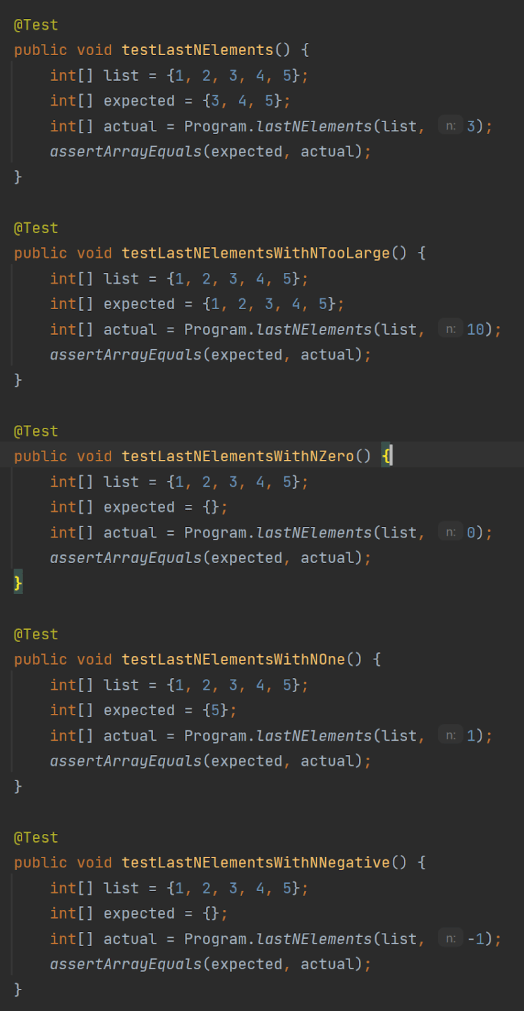

Unit Test Example 1:

Here I created a very basic function in Java to return the last N elements. I used the ‘generate from comments’ functionality to create the method. As you can see, the logic is not the most efficient or elegant, nor does it handle all possible values of n. I’m not going to criticise this here, though, as a developer is better placed to analyse Copilot’s ability to write production code.
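For readers who want something concrete to picture, below is one possible version of such a function. It is a sketch written for this article, not the code Copilot generated. The class name `ListUtils`, the method name `lastNElements`, the use of `List<T>` as input, and the chosen behaviour for awkward values of n (rejecting negative n, returning the whole list when n exceeds its size) are all assumptions.

```java
import java.util.ArrayList;
import java.util.List;

final class ListUtils {

    // Returns a new list holding the last n elements of items.
    // Assumed behaviour: a negative n is rejected, and an n larger than
    // the list simply returns a copy of the whole list.
    static <T> List<T> lastNElements(List<T> items, int n) {
        if (n < 0) {
            throw new IllegalArgumentException("n must not be negative: " + n);
        }
        int start = Math.max(0, items.size() - n);
        return new ArrayList<>(items.subList(start, items.size()));
    }

    public static void main(String[] args) {
        List<Integer> numbers = List.of(1, 2, 3, 4, 5);
        System.out.println(lastNElements(numbers, 2));   // [4, 5]
        System.out.println(lastNElements(numbers, 10));  // [1, 2, 3, 4, 5]
    }
}
```

Deciding up front what should happen for n = 0, negative n and n larger than the list is what makes the later test scenarios easy to enumerate.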

The tests were generated by creating the class, importing JUnit then using the ‘multiple suggestions’ functionality to generate (in this case) 10 suggestions. I chose the 2nd option in the list; the first option was very similar, but frustratingly named the tests numerically (testLastNElements1, …2, …).

Summary:

- Structure / code. The tests are all structured well & easy to read or interpret. I would say this is very similar to how I would structure the tests myself. There is a lot of repeated code, so some of this could be abstracted out / set globally.

- Scenarios. The 5 scenarios chosen are excellent. It includes boundary testing of ‘n’ and has a good balance of covering enough variations of ‘n’ without including any unnecessary cases. The main point here is that Copilot has failed to write any test cases around the size of the array. Any tester / developer would know to include a case with an empty list. You could also argue that there should be a variation of lists used, whereas Copilot uses the same list for every scenario.

- Test case naming. The test names are all comprehensible, although perhaps not perfect. The one caveat here is that as I mentioned before, the default prediction was to just name each test with a number at the end, which is pretty useless to anyone reviewing the failed tests.
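The gaps described above (no empty-list case, the same list reused everywhere, numeric test names) can be sketched as follows. This is an illustration of the kind of scenarios and naming I would want, not the tests Copilot produced. It uses plain Java checks so that it runs without JUnit; in a real project each method would be a JUnit test. The stand-in `lastNElements` and its behaviour for negative or oversized n are assumptions.

```java
import java.util.ArrayList;
import java.util.List;

final class LastNElementsScenarios {

    // Compact stand-in for the function under test (assumed behaviour).
    static <T> List<T> lastNElements(List<T> items, int n) {
        if (n < 0) {
            throw new IllegalArgumentException("n must not be negative: " + n);
        }
        return new ArrayList<>(items.subList(Math.max(0, items.size() - n), items.size()));
    }

    // Minimal assertion helper so the sketch has no test-framework dependency.
    static void check(boolean condition, String scenario) {
        if (!condition) {
            throw new AssertionError("Failed: " + scenario);
        }
    }

    static void returnsEmptyListWhenInputIsEmpty() {
        check(lastNElements(List.of(), 3).isEmpty(), "empty input list");
    }

    static void returnsEmptyListWhenNIsZero() {
        check(lastNElements(List.of(1, 2, 3), 0).isEmpty(), "n is zero");
    }

    static void returnsWholeListWhenNEqualsSize() {
        check(lastNElements(List.of(1, 2, 3), 3).equals(List.of(1, 2, 3)), "n equals size");
    }

    static void returnsWholeListWhenNExceedsSize() {
        check(lastNElements(List.of(7, 8), 5).equals(List.of(7, 8)), "n exceeds size");
    }

    static void returnsLastElementOfSingleElementStringList() {
        check(lastNElements(List.of("only"), 1).equals(List.of("only")), "single-element list");
    }

    static void rejectsNegativeN() {
        boolean thrown = false;
        try {
            lastNElements(List.of(1, 2, 3), -1);
        } catch (IllegalArgumentException expected) {
            thrown = true;
        }
        check(thrown, "negative n is rejected");
    }

    // Runs every scenario and returns how many were executed.
    static int runAll() {
        returnsEmptyListWhenInputIsEmpty();
        returnsEmptyListWhenNIsZero();
        returnsWholeListWhenNEqualsSize();
        returnsWholeListWhenNExceedsSize();
        returnsLastElementOfSingleElementStringList();
        rejectsNegativeN();
        return 6;
    }

    public static void main(String[] args) {
        System.out.println(runAll() + " scenarios passed");
    }
}
```

Descriptive method names mean a failure report already tells the reviewer which behaviour broke, which a numeric suffix cannot do.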

Usability Summary

Having used Copilot to write tests for a little while, and based on the examples above, I’ve picked up on a few general trends. It performs better at writing unit and API tests, and struggles most with UI E2E tests. It also performs better the more context you give it in the current file, but it seems unable to take account of context outside that file. A very common scenario when maintaining tests is that they need to be updated after changes to the production code. At present, Copilot is unable to make the connection between the tests and the code under test, and this seems to be consistent across every level of testing. One of the more impressive points was Copilot’s ability to suggest test scenarios in a human-readable format. My overall impression was that the tool isn’t quite there yet, particularly as it often led me down the wrong path.

Risks and Concerns

As with all recent products linked to OpenAI large language models, there are a number of ethical and legal issues surrounding Copilot. It is important that any user of generative AI tools is aware of the risks that come with them.

- There are a number of copyright infringement claims around the tool. Numerous lawsuits have been raised against AI chatbots and other generative AI, so it is worth being aware that these have the potential to impact Copilot. As Copilot uses OpenAI’s Codex, a descendant of GPT-3, any legal rulings affecting the GPT models could also affect Copilot.

- OpenAI have also recently been sued for defamation, showing that their generative AI tools could potentially be biased or contain false information.

- With these legal cases ongoing, there is a question over whether ‘your’ code will be used to train OpenAI’s large language models. According to the docs, code will not be shared unless this is accepted in the settings. However, it is worth noting that this option is ticked by default, and I would recommend disabling it.

- Working on client projects, the code written belongs to the client – therefore it shouldn’t be used to train AI tools and potentially be reused by other users of the tool.

- Is it ethical to charge for a tool that solely uses open source code? This somewhat undermines the open source code principles.

- Copilot doesn’t show the source of its suggestions, hiding where the code originates from. As mentioned later, there are tools that offer this.

Upcoming Features

As mentioned earlier, Copilot has a number of features (or ‘previews’) lined up under the name ‘Copilot X’. These leverage GPT-4 and other deep learning models, and plan to go way beyond the scope of the current tool. It’s worth noting that you can join the waitlist for the majority of these previews to test them out in Beta mode (I wasn’t actually accepted onto these until my first payment for Copilot went through). I’m not going to explain these here, as the link has more info on each.

- Copilot for pull requests.

- Copilot Chat.

- Copilot for docs.

- Copilot for CLI.

- Copilot Voice.

Competitors

I’ve just included a few of the more popular alternatives here, but there are many more out there. Without actually using any of these tools, it is difficult to give a comprehensive comparison.

- Amazon CodeWhisperer

  - Free

  - ✔ Shows the code reference/author. This transparency gives reassurance that code is open source and available to use.

  - ✔ Includes 50 security scans a month

  - Currently supports 15 languages.

  - ❌ Slower to make suggestions.

  - ❌ Arguably not the same level of code suggestions.

- CodeGeeX

  - Free, open source.

  - Currently supports 19 languages.

  - ❌ Reportedly, delayed code generation

  - ❌ Limited IDE support (VSCode and JetBrains IDEs).

- Tabnine

  - Supports all major IDEs.

  - ✔ Strong suggestions.

  - ✔ Offline mode available.

  - Free and pro plans available.

  - Currently supports 25 languages.

Key Takeaways

I’ve tried to order these, as I think the first few are very important, whereas some points are quite subjective (as I’ve been pointing out the whole time, the tool is what you wish to make of it).

- Don’t run before you can walk. I think it is important that the user has a good fundamental knowledge of the tool in use and of coding practices, as otherwise they’ll quickly lose understanding of the code. I would be very cautious about junior devs/testers using the tool, as it could lead to bad practices and to losing a lot of their understanding of the code.

- Take it or leave it. Like many tools, it takes time to understand how it works and more importantly how it can best help you code, as there are a lot of features that currently don’t work well enough to make coding more efficient. A lot of it is personal preference, and the beauty of Copilot is that you never have to accept the suggestions, but they’re there if you need them.

- It’s very much a WIP. As mentioned, there are a number of upcoming features as part of ‘Copilot X’. Adopting it in its present form means we can stay up to date with new features, e.g. by joining the waiting list for the Copilot X features in order to try them in Beta mode and stay ahead of the curve.

- Raised expectations. Understand that Copilot is a tool to make developing / writing code more efficient. There is no real new ‘capability’ here, i.e. it’s not adding anything new, simply speeding up the process, in the same way that tools such as IDEs and code formatters help.

- Writing tests isn’t perfect. For E2E tests, particularly UI tests, there are more powerful tools out there right now, like Playwright’s Code Generator and Cypress Studio (albeit for a different use case). Copilot can definitely be used in conjunction with these tools to speed up the process. Copilot performs best when it has a lot of prior context, and the same is true for tests. It performs well at suggesting tests that are similar to the existing tests but cover a slightly different scenario.

Author Bio

Paddy Clark – Senior Test Engineer Consultant at Nimble Approach

Paddy has over 5 years of experience in software testing, 3 years of experience in consulting and a degree in Computer Science.